Introduction

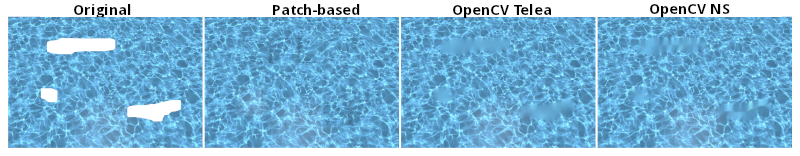

Inpainting is the task of reconstructing missing regions of an image. OpenCV implements two methods (Telea's fast marching method and a Navier-Stokes based method) that work well for rebuilding small regions. For large holes or heavily textured areas, these algorithms reach their limits. The first figure shows that, for significant gaps on a heavily textured background, OpenCV's built-in inpainting gives less satisfactory results than patch-based texture inpainting.

Patch-based inpainting

The algorithm used for patch-based inpainting is described in [1][2]. It uses image quilting to generate texture and a KDTree to find the cells whose overlaps best match their already placed neighbors, and it fills the missing parts of the image with those cells.

- The image is subdivided into square cells whose side is window_size + 2*overlap_size. The overlap regions are the borders of each cell and are used to compute similarities between adjacent cells. To get more cells, we also compute the horizontal and vertical mirrors of each cell. We can additionally rotate the input image and repeat the operation, which doubles the number of cells.

- We train a KDTree on the computed cells and their overlaps. Several trees have to be trained because the overlaps available for a cell depend on its position (e.g., the first cell to place has only top and left overlaps, while the last cell has top, bottom, left, and right overlaps).

- Finally, we fill the hole cell by cell, in grid order. For each position, we compute the overlap area, then query the KDTree for the cell that best matches it. Cells can be combined using several techniques, the most common being the feathering merge: pixels are weighted by their distance from the cell edges (zero at the border, one at the center of the cell where there is no overlap), and the weighted images are then summed.
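
The three steps above can be sketched in a few lines of NumPy and SciPy. This is a minimal illustration under stated assumptions, not the code of the library described here: the function names (extract_cells, top_left_descriptor, feather_weights) are hypothetical, only the tree for the "top and left overlap" case is built, and the rotation step is left out.

```python
import numpy as np
from scipy.spatial import cKDTree


def extract_cells(image, window_size, overlap_size, step):
    """Cut square cells of side window_size + 2 * overlap_size and add mirrors."""
    side = window_size + 2 * overlap_size
    cells = []
    for y in range(0, image.shape[0] - side + 1, step):
        for x in range(0, image.shape[1] - side + 1, step):
            cell = image[y:y + side, x:x + side]
            # original cell, horizontal mirror, vertical mirror
            cells.extend([cell, cell[:, ::-1], cell[::-1, :]])
    return np.stack(cells).astype(np.float32)


def top_left_descriptor(cells, overlap_size):
    """Flatten the top and left overlap strips of every cell into one vector."""
    top = cells[:, :overlap_size, :].reshape(len(cells), -1)
    left = cells[:, :, :overlap_size].reshape(len(cells), -1)
    return np.hstack([top, left])


def feather_weights(window_size, overlap_size):
    """Weights ramp from 0 at the cell border to 1 over the non-overlapping center."""
    side = window_size + 2 * overlap_size
    index = np.arange(side)
    ramp = np.clip(np.minimum(index, index[::-1]) / overlap_size, 0.0, 1.0)
    return np.outer(ramp, ramp)


# Synthetic grayscale texture, used only to exercise the functions.
rng = np.random.default_rng(0)
texture = rng.random((64, 64))

cells = extract_cells(texture, window_size=8, overlap_size=2, step=4)
tree = cKDTree(top_left_descriptor(cells, overlap_size=2))

# Query with the overlaps of a known cell: the tree should return that cell.
query = top_left_descriptor(cells[5:6], overlap_size=2)[0]
distance, index = tree.query(query)

weights = feather_weights(window_size=8, overlap_size=2)
```

In a full implementation, the query vector would come from the pixels already placed around the position being filled, and one tree would be needed per overlap configuration. The weights array would then multiply each candidate cell before the overlapping contributions are summed.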

One difficulty arises with very large images, because dividing the image into cells can consume a lot of memory. To work around this problem, the patch-based-inpainting library provides several parameters that reduce memory usage. One way is to restrict training to a sub-area of the image. Another is to skip the mirror and rotation operations on the cells. A third is to increase the step used to divide the image into cells, which produces fewer cells.
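
To get a feel for these trade-offs, the number of cells and their raw storage can be estimated before training. The helper below is a rough sketch: the function and its parameter names are hypothetical and do not correspond to the library's actual options, it assumes cells are stored as 32-bit floats, and it ignores the memory used by the KDTree itself.

```python
def estimate_cell_memory(height, width, window_size, overlap_size, step,
                         mirrors=True, rotations=True,
                         channels=3, bytes_per_value=4):
    """Return (number of cells, bytes needed to store them)."""
    side = window_size + 2 * overlap_size
    rows = max(0, (height - side) // step + 1)
    cols = max(0, (width - side) // step + 1)
    # original cell plus horizontal and vertical mirrors
    variants = 3 if mirrors else 1
    if rotations:
        # rotating the input and repeating the extraction doubles the cells
        variants *= 2
    n_cells = rows * cols * variants
    return n_cells, n_cells * side * side * channels * bytes_per_value


# Compare a dense extraction with a lighter configuration on a sub-area.
dense = estimate_cell_memory(4000, 4000, 32, 8, step=1)
light = estimate_cell_memory(1000, 1000, 32, 8, step=8,
                             mirrors=False, rotations=False)
print(f"dense: {dense[0]} cells, {dense[1] / 1e9:.1f} GB")
print(f"light: {light[0]} cells, {light[1] / 1e9:.3f} GB")
```

Since the step applies along both axes, doubling it divides the number of cells by roughly four.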

To run the inpainting, the user provides the image to inpaint, parameters such as the cell and overlap sizes, and a binary mask in which every non-zero pixel will be inpainted.
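
As an illustration of the mask convention, the snippet below builds a mask for a rectangular hole with NumPy. The image size and hole position are made up for the example, and the call into the library is left out because its exact signature is not shown here.

```python
import numpy as np

# Placeholder image; in practice this would be loaded from disk.
image = np.zeros((100, 100, 3), dtype=np.uint8)

# Single-channel mask with the same height and width as the image.
mask = np.zeros(image.shape[:2], dtype=np.uint8)
mask[30:60, 40:80] = 255  # any non-zero value marks a pixel to inpaint

# Pixels to fill and the bounding box of the hole (y0, y1, x0, x1).
to_fill = mask != 0
ys, xs = np.nonzero(to_fill)
bbox = (ys.min(), ys.max() + 1, xs.min(), xs.max() + 1)
```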